When and why did coding theory arise? Theory of coding, types of coding, and basic concepts

Coding theory is the study of the properties of codes and their suitability for achieving a given goal. Encoding information is the process of transforming it from a form convenient for direct use into a form convenient for transmission, storage, automatic processing, and protection from unauthorized access. The main problems of coding theory include unique (one-to-one) decodability and the complexity of implementing a communication channel under given conditions. In this regard, coding theory mainly considers the following areas: data compression, forward error correction, cryptography, physical coding, error detection and correction.

Format

The course consists of 10 academic weeks. To solve most of the test problems, it is enough to master the material covered in the lectures. The seminars also deal with more complex problems that may interest a listener who is already familiar with the basics.

Course program

- Alphabetical coding. Sufficient conditions for unambiguous decoding: uniformity, the prefix property, the suffix property. Recognition of unique decodability: Markov's criterion. An estimate of the length of an ambiguously decodable word.

- Kraft-McMillan inequality; the existence of a prefix code with a given set of word lengths; corollary on the universality of prefix codes.

- Minimum redundancy codes: problem statement, Huffman's reduction theorem.

- The problem of correcting and detecting errors. Geometric interpretation. Error types. Hamming and Levenshtein metrics. Code distance. Main problems of the theory of error-correcting codes.

- Varshamov-Tenengolts codes, algorithms for correcting single deletion and insertion errors.

- The simplest bounds on the parameters of codes correcting substitution errors: the sphere-packing bound, the Singleton bound, the Plotkin bound.

- Embedding of metric spaces. Lemma on the number of vectors in Euclidean space. Elias-Bassalygo bound.

- Linear codes. Definitions. Generator and parity-check matrices. Relationship between code distance and the parity-check matrix. Gilbert-Varshamov bound. Systematic coding. Syndrome decoding. Hamming codes.

- Residual code. Griesmer and Solomon-Stiffler bounds.

- The complexity of the problem of decoding linear codes: NCP problem (problem about the nearest code word).

- Reed-Solomon codes. Berlekamp-Welch decoding algorithm.

- Reed-Muller codes: code distance, majority decoding algorithm.

- Generalizations of the Reed-Muller construction. The Lipton-DeMillo-Schwartz-Zippel lemma. The concept of algebraic-geometric codes.

- Expander graphs. Probabilistic proof of the existence of expanders. Codes based on bipartite graphs. Code distance of codes based on expanders. Sipser-Spielman decoding algorithm.

- Shannon's theorems for a probabilistic channel model.

- Applications of error-correcting codes. Randomized protocol in communication complexity. McEliece cryptosystem. Homogeneous (pseudo-random) sets based on codes, their applications to derandomization in the MAX-SAT problem.

From Wikipedia, the free encyclopedia

Coding theory is the science of the properties of codes and their suitability for achieving a given goal.

General information

Encoding is the process of converting data from a form convenient for direct use into a form convenient for transmission, storage, automatic processing, and protection from unauthorized access. The main problems of coding theory include unique (one-to-one) decodability and the complexity of implementing a communication channel under given conditions. In this regard, coding theory mainly considers the following areas:

Data compression

Forward Error Correction

Cryptography

Cryptography (from Ancient Greek κρυπτός, "hidden", and γράφω, "I write") is the field of knowledge about methods for ensuring confidentiality (outsiders cannot read the information), data integrity (the information cannot be changed unnoticed), authentication (verifying authorship or other properties of an object), and non-repudiation (the author cannot deny authorship).

04/04/2006 Leonid Chernyak Category:Technologies

"Open Systems"

The creation of computers would have been impossible if, simultaneously with their appearance, the theory of signal coding had not been created.

Coding theory is one of those areas of mathematics that has markedly influenced the development of computing. Its scope extends to data transmission over real (noisy) channels, and its subject is ensuring the correctness of the transmitted information. In other words, it studies how best to pack the data so that, after transmission, the useful information can be extracted from the data reliably and easily. Coding theory is sometimes confused with encryption, but this is a mistake: cryptography solves the inverse problem, since its goal is to make it difficult to extract information from data.

The need to encode data was first encountered more than a hundred and fifty years ago, shortly after the invention of the telegraph. The channels were expensive and unreliable, which made minimizing the cost and increasing the reliability of telegram transmission an urgent task. The problem was exacerbated by the laying of transatlantic cables. From 1845, special code books came into use; with their help, telegraphists manually "compressed" messages, replacing common word sequences with shorter codes. At the same time, parity began to be used to check the correctness of the transfer, a method that was also used to check the input of punched cards in computers of the first and second generations. To do this, a specially prepared card with a checksum was placed last in the input deck. If the input device was not very reliable (or the deck was too large), an error could occur. To correct it, the input procedure was repeated until the calculated checksum matched the value stored on the card. Not only is this scheme inconvenient, it also misses double errors. With the development of communication channels, a more effective control mechanism was required.
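The weakness of a parity check mentioned above can be illustrated with a short sketch. It assumes a single even-parity bit appended to a block of bits; the function names and the sample block are hypothetical and are not taken from any historical system.

```python
def parity_bit(bits):
    """Return the even-parity bit for a sequence of 0/1 values."""
    return sum(bits) % 2


def with_parity(bits):
    """Append the parity bit so that the total number of ones is even."""
    return list(bits) + [parity_bit(bits)]


def parity_ok(word):
    """A received word passes the check if its number of ones is even."""
    return sum(word) % 2 == 0


# Hypothetical 7-bit block protected by one parity bit.
word = with_parity([1, 0, 1, 1, 0, 0, 1])

single = word.copy()
single[2] ^= 1          # one flipped bit: the check fails, the error is detected

double = single.copy()
double[5] ^= 1          # a second flipped bit: the check passes again

print(parity_ok(word), parity_ok(single), parity_ok(double))
```

A single flipped bit changes the parity and is detected, while two flipped bits restore it, which is exactly the "double error" blind spot described in the text.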

The first theoretical solution to the problem of data transmission over noisy channels was proposed by Claude Shannon, the founder of statistical information theory. Shannon was a star of his time and belonged to the US academic elite. As a graduate student of Vannevar Bush, in 1940 he received the Alfred Noble Prize (not to be confused with the Nobel Prize!), awarded to scientists under the age of 30. While at Bell Labs, Shannon wrote "A Mathematical Theory of Communication" (1948), where he showed that if the capacity of the channel is greater than the entropy of the message source, then the message can be encoded so that it is transmitted without undue delay. This conclusion is contained in one of the theorems proved by Shannon; its meaning is that, given a channel with sufficient capacity, a message can be transmitted with some time delay. In addition, he showed the theoretical possibility of reliable transmission in the presence of noise in the channel. The formula C = W log((P+N)/N), carved on a modest monument to Shannon installed in his hometown in Michigan, is compared in significance with Albert Einstein's formula E = mc².
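As a small numeric illustration of the formula C = W log((P+N)/N), the sketch below evaluates it with a base-2 logarithm, so that the result is in bits per second. The choice of base, the function name, and the sample values are assumptions made for the example only.

```python
import math


def shannon_capacity(bandwidth_hz, signal_power, noise_power):
    """Evaluate C = W * log2((P + N) / N); base 2 gives bits per second."""
    return bandwidth_hz * math.log2((signal_power + noise_power) / noise_power)


# Hypothetical channel: 3 kHz bandwidth, signal 1000 times stronger than noise.
capacity = shannon_capacity(3000.0, 1000.0, 1.0)
print(f"capacity is roughly {capacity:.0f} bit/s")
```

When signal and noise power are equal, the ratio (P+N)/N is 2 and the capacity in bits per second equals the bandwidth in hertz.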

Shannon's work gave rise to a great deal of further research in information theory, but at first it had no practical engineering application. The transition from theory to practice was made possible by the efforts of Richard Hamming, Shannon's colleague at Bell Labs, who gained fame for discovering a class of codes that came to be called "Hamming codes." Legend has it that the inconvenience of working with punched cards on the Bell Model V relay calculating machine in the mid-1940s prompted Hamming to invent his codes. He was given machine time on weekends, when there were no operators, and he had to fiddle with the input himself. Be that as it may, Hamming proposed codes capable of correcting errors in communication channels, including data transmission lines in computers, primarily between the processor and memory. Hamming codes became evidence of how the possibilities indicated by Shannon's theorems could be realized in practice.
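To make the idea of an error-correcting code concrete, here is a minimal sketch of the classical Hamming (7,4) code, which protects four data bits with three check bits and corrects any single flipped bit. The bit layout (check bits in positions 1, 2, and 4) is one common convention chosen for illustration; the function names are hypothetical.

```python
def hamming74_encode(data):
    """Encode 4 data bits into 7 bits; check bits sit in positions 1, 2, 4."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4   # covers positions 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # covers positions 3, 6, 7
    p4 = d2 ^ d3 ^ d4   # covers positions 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(received):
    """Correct at most one flipped bit and return the 4 data bits."""
    c = list(received)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]   # parity over positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]   # parity over positions 2, 3, 6, 7
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]   # parity over positions 4, 5, 6, 7
    position = s1 + 2 * s2 + 4 * s4  # syndrome = 1-based index of the bad bit
    if position:
        c[position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


codeword = hamming74_encode([1, 0, 1, 1])
damaged = codeword.copy()
damaged[4] ^= 1                      # simulate a single-bit channel error
print(hamming74_decode(damaged))
```

The syndrome, read as a binary number, points directly at the position of the flipped bit, which is what makes the correction step a single XOR.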

Hamming published his paper in 1950, although internal reports date his coding theory to 1947. Therefore, some believe that Hamming, not Shannon, should be considered the father of coding theory. However, in the history of technology it is useless to look for the first.

What is certain is that Hamming was the first to propose error-correcting codes (ECC). Modern modifications of these codes are used in all data storage systems and for exchange between the processor and RAM. Reed-Solomon codes, which belong to the same broad family of error-correcting codes, are used in CDs, allowing recordings to be played without the clicks and noise that scratches and dust particles could cause. There are many versions of codes based on Hamming's; they differ in coding algorithms and in the number of check bits. Such codes acquired particular importance with the development of deep space communications with interplanetary stations; for example, there are Reed-Muller codes with 32 control bits for seven information bits, or 26 for six.

Among the latest ECC codes, LDPC (Low-Density Parity-Check) codes should be mentioned. In fact, they have been known for about thirty years, but special interest in them arose only in recent years, when high-definition television began to develop. LDPC codes are not 100% reliable, but the error rate can be adjusted to the desired level while using the channel bandwidth to the fullest. Turbo codes are close to them; they are effective when working with objects located in deep space and with limited channel bandwidth.

The name of Vladimir Alexandrovich Kotelnikov is firmly inscribed in the history of coding theory. In 1933, in "Materials on Radio Communications for the First All-Union Congress on the Technical Reconstruction of Communications," he published the work "On the Transmission Capacity of 'Ether' and Wire in Electrical Communications." The name of Kotelnikov is included, as an equal, in the name of one of the most important theorems in coding theory. This theorem defines the conditions under which the transmitted signal can be restored without loss of information.

This theorem has been called by various names, including the "WKS theorem" (the abbreviation WKS stands for Whittaker, Kotelnikov, Shannon). Some sources use the names Nyquist-Shannon sampling theorem and Whittaker-Shannon sampling theorem, while Russian university textbooks most often call it simply the "Kotelnikov theorem." In fact, the theorem has a longer history. Its first part was proved in 1897 by the French mathematician Émile Borel. Edmund Whittaker contributed in 1915. In 1920, the Japanese mathematician Kinnosuke Ogura published corrections to Whittaker's research, and in 1928 the American Harry Nyquist refined the principles of digitization and analog signal reconstruction.

Claude Shannon (1916 - 2001) showed equal interest in mathematics and electrical engineering from his school years. In 1932 he entered the University of Michigan and in 1936 the Massachusetts Institute of Technology, from which he graduated in 1940 with two degrees: a master's degree in electrical engineering and a doctorate in mathematics. In 1941, Shannon joined Bell Laboratories. Here he began to develop ideas that later resulted in information theory. In 1948, Shannon published the article "A Mathematical Theory of Communication", which formulated his basic ideas, in particular the measurement of the amount of information through entropy, and proposed a unit of information defined by the choice between two equally probable options, that is, what was later called a bit. In 1957-1961, Shannon published works that proved the capacity theorem for noisy communication channels, which now bears his name. In 1957, Shannon became a professor at the Massachusetts Institute of Technology, from which he retired 21 years later. In his "well-deserved rest" Shannon devoted himself completely to his old passion for juggling. He built several juggling machines and even created a general theory of juggling.

Richard Hamming (1915 - 1998) began his education at the University of Chicago, where he received a bachelor's degree in 1937. In 1939 he received a master's degree from the University of Nebraska, and later a doctorate in mathematics from the University of Illinois. In 1945, Hamming began working on the Manhattan Project, a massive government research effort to build the atomic bomb. In 1946, Hamming joined Bell Telephone Laboratories, where he worked with Claude Shannon. In 1976, Hamming received a chair at the Naval Postgraduate School in Monterey, California.

The work that made him famous, a fundamental study of error-detecting and error-correcting codes, was published by Hamming in 1950. In 1956, he was involved in the development of the IBM 650, one of the early mainframes. His work laid the foundation for a programming language that later evolved into high-level programming languages. In recognition of Hamming's contribution to computer science, the IEEE instituted a medal named after him for distinguished service in computer science and systems theory.

Vladimir Kotelnikov (1908 - 2005) entered the Electrical Engineering Department of the Bauman Moscow Higher Technical School (MVTU) in 1926, but graduated from the Moscow Power Engineering Institute (MPEI), which had separated from the MVTU as an independent institute. While studying in graduate school (1931-1933), Kotelnikov gave a mathematically precise formulation and proof of the sampling theorem, which was later named after him. After finishing graduate school in 1933, Kotelnikov continued teaching at the Moscow Power Engineering Institute and went to work at the Central Research Institute of Communications (TsNIIS). In 1941, V. A. Kotelnikov formulated a clear statement of the requirements that a mathematically unbreakable cipher system must satisfy and gave a proof that such a system cannot be broken. In 1944, Kotelnikov became professor and dean of the radio engineering faculty of MPEI, where he worked until 1980. In 1953, at the age of 45, Kotelnikov was elected directly a full member of the USSR Academy of Sciences. From 1968 to 1990, V. A. Kotelnikov was also a professor and head of a department at the Moscow Institute of Physics and Technology.

The birth of coding theory

Basic concepts of coding theory

Previously, coding tools played an auxiliary role and were not considered a separate subject of mathematical study, but with the advent of computers the situation changed radically. Coding literally permeates information technology and is a central issue in solving a wide variety of (practically all) programming tasks:

- representing data of an arbitrary nature (for example, numbers, text, graphics) in computer memory;
- protecting information from unauthorized access;
- ensuring noise immunity during data transmission over communication channels;
- compressing information in databases.

Coding theory is a branch of information theory that studies how messages can be identified with the signals that represent them.

Task: to match the source of information to the communication channel.

Object: discrete or continuous information supplied to the consumer through an information source.

Encoding is the transformation of information into a form convenient for transmission over a specific communication channel. An example of coding in mathematics is the coordinate method introduced by Descartes, which makes it possible to study geometric objects through their analytical expression in the form of numbers, letters, and their combinations, that is, formulas. Decoding is the restoration of the received message from the encoded form into a form accessible to the consumer.

Topic 5.2. Alphabetical coding

In the general case, the coding problem can be represented as follows. Let two alphabets $A$ and $B$ be given, each consisting of a finite number of characters: $A = \{a_1, \dots, a_r\}$ and $B = \{b_1, \dots, b_m\}$. The elements of an alphabet are called letters. An ordered sequence of letters $\alpha = a_{i_1} a_{i_2} \dots a_{i_n}$ of the alphabet $A$ is called a word; the number $n = l(\alpha) = |\alpha|$ shows the number of letters in the word and is called the length of the word. The empty word is denoted $\Lambda$. For a word $\alpha$, its first letter is called the beginning, or prefix, of the word, and its last letter is called the ending, or postfix, of the word.

Words can be combined. To do this, the prefix of the second word must immediately follow the postfix of the first; in the new word they naturally lose their status, unless one of the words was empty. The concatenation of words $\alpha_1$ and $\alpha_2$ is denoted $\alpha_1 \alpha_2$, and the concatenation of $n$ identical words $\alpha$ is denoted $\alpha^n$, where $\alpha^0 = \Lambda$.

The set of all non-empty words of the alphabet $A$ is denoted by $A^*$. The set $A$ is called the message alphabet, and the set $B$ is called the coding alphabet. The set of words composed in the alphabet $B$ is denoted by $B^*$.

Denote by $F$ the mapping of words of the alphabet $A$ to words of the alphabet $B$. Then the word $\beta = F(\alpha)$ is called the code of the word $\alpha$. Coding is a universal way of representing information during its storage, transmission, and processing in the form of a system of correspondences between message elements and the signals with which these elements can be fixed. Thus, a code is a rule for the unambiguous transformation (i.e., a function) of a message from one symbolic form of representation (source alphabet $A$) to another (target alphabet $B$), usually without any loss of information.

The process of converting words of the source alphabet $A$ into the alphabet $B$, $F: A^* \to B^*$, is called information coding. The process of converting the word $\beta$ back into the word $\alpha$ is called decoding. Thus, decoding is the inverse of $F$, i.e., $F^{-1}$. Since a decoding operation must be possible for any encoding, the mapping must be invertible, that is, different messages must have different codes.

If $|B| = m$, then $F$ is called $m$-ary coding; the most common case is binary coding, $B = \{0, 1\}$. It is this case that is considered below. If all code words have the same length, the code is called uniform, or block.

Alphabetical (or letter-by-letter) coding can be specified by a code table. The code, or encoding function, is a substitution $\sigma = \langle a_1 \to \beta_1, \dots, a_r \to \beta_r \rangle$, where $a_i \in A$ and $\beta_i \in B^*$; a word is then encoded letter by letter, $F(a_{i_1} \dots a_{i_k}) = \beta_{i_1} \dots \beta_{i_k}$. The words $\beta_i$ are called elementary codes, and such a letter-by-letter coding is specified by its set of elementary codes. Alphabetical encoding can be used for any set of messages. Thus alphabetic encoding is the simplest kind of encoding and can always be introduced on non-empty alphabets.
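Letter-by-letter coding with a code table can be sketched in a few lines. The example below assumes an illustrative uniform table that maps each decimal digit to four binary characters; the table and the function names are hypothetical choices for the sketch.

```python
# Illustrative code table: each decimal digit -> 4 binary characters.
TABLE = {str(d): format(d, "04b") for d in range(10)}


def encode(message, table):
    """Apply the code table letter by letter and concatenate the results."""
    return "".join(table[letter] for letter in message)


def decode_uniform(code, table, width):
    """Decode a uniform (block) code by cutting it into fixed-width pieces."""
    inverse = {elementary: letter for letter, elementary in table.items()}
    pieces = (code[i:i + width] for i in range(0, len(code), width))
    return "".join(inverse[piece] for piece in pieces)


code = encode("2024", TABLE)
print(code)                             # 0010000000100100
print(decode_uniform(code, TABLE, 4))   # 2024
```

Because every elementary code has the same length, the decoder knows where each one ends, which is why a uniform code with distinct elementary codes is always decodable.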

EXAMPLE. Let the alphabets $A = \{0, 1, 2, 3, 4, 5, 6, 7, 8, 9\}$ and $B = \{0, 1\}$ be given. Then the coding table can be the substitution $\langle 0 \to 0000, 1 \to 0001, 2 \to 0010, \dots, 9 \to 1001 \rangle$. This is binary-coded decimal (BCD) encoding; it is one-to-one and therefore decodable. However, a scheme that maps each digit to its shortest binary representation, $\langle 0 \to 0, 1 \to 1, 2 \to 10, 3 \to 11, \dots, 7 \to 111, 8 \to 1000, 9 \to 1001 \rangle$, is not one-to-one. For example, the string of six ones 111111 can correspond to the word 333 and to 77, as well as to 111111, 137, 3311, or 7111, plus any permutation of these.

An alphabetic coding scheme is called prefix if the elementary code of one letter is not a prefix of the elementary code of another letter. An alphabetic coding scheme is said to be separable if any word composed of elementary codes decomposes into elementary codes in a unique way. Alphabetical encoding with a separable scheme allows decoding. It can be proved that every prefix scheme is separable. For an alphabetic coding scheme to be separable, the lengths of its elementary codes must satisfy a relation known as McMillan's inequality.

McMillan's inequality. If the alphabetic coding scheme $\sigma = \langle a_i \to \beta_i \rangle_{i=1}^{r}$ is separable, then the following inequality holds:

$$\sum_{i=1}^{r} \frac{1}{2^{l_i}} \le 1, \qquad l_i = |\beta_i|.$$

A scheme is not prefix when, for example, the elementary code of a letter $a$ is a prefix of the elementary code of a letter $b$.

Topic 5.3. Minimum redundancy coding

In practice, it is important that message codes be as short as possible. Alphabetical coding is suitable for any messages, but if nothing is known about the set of all words of the alphabet $A$, it is difficult to formulate the optimization problem precisely. However, in practice additional information is often available. For example, for messages in natural language, such additional information may be the probability distribution of the occurrence of letters in the message. Then the problem of constructing an optimal code acquires an exact mathematical formulation and a rigorous solution.
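The prefix condition and McMillan's inequality from Topic 5.2 are easy to check mechanically. The sketch below compares two tables for the decimal digits: a fixed-width 4-bit table and a table using the shortest binary representation of each digit. Both tables and all function names are assumptions made for illustration.

```python
from fractions import Fraction


def is_prefix_scheme(codes):
    """True if no elementary code is a prefix of a different elementary code."""
    codes = list(codes)
    return not any(a != b and b.startswith(a) for a in codes for b in codes)


def mcmillan_sum(codes, m=2):
    """Left-hand side of McMillan's inequality for an m-ary coding alphabet."""
    return sum(Fraction(1, m ** len(code)) for code in codes)


def decodings(code, table):
    """List every way to split `code` into elementary codes of `table`."""
    if not code:
        return [""]
    found = []
    for letter, elementary in table.items():
        if code.startswith(elementary):
            tail = decodings(code[len(elementary):], table)
            found += [letter + rest for rest in tail]
    return found


fixed = {str(d): format(d, "04b") for d in range(10)}    # 0000 ... 1001
shortest = {str(d): format(d, "b") for d in range(10)}   # 0, 1, 10, 11, ...

print(is_prefix_scheme(fixed.values()), mcmillan_sum(fixed.values()))
print(is_prefix_scheme(shortest.values()), mcmillan_sum(shortest.values()))
print(len(decodings("111111", shortest)))
```

For the fixed-width table the sum stays below 1 and the scheme is prefix. For the shortest-representation table the sum exceeds 1, so by McMillan's inequality it cannot be separable, and the string 111111 indeed has many different decodings.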

Let some separable alphabetic coding scheme $\sigma = \langle a_i \to \beta_i \rangle_{i=1}^{r}$ be given. Then any scheme $\sigma' = \langle a_i \to \beta'_i \rangle_{i=1}^{r}$, where the ordered set $\langle \beta'_1, \dots, \beta'_r \rangle$ is a permutation of the ordered set $\langle \beta_1, \dots, \beta_r \rangle$, will also be separable. If the lengths of all elementary codes are equal, then permuting them in the scheme does not affect the length of the encoded message. If the lengths of the elementary codes differ, then the length of the message code depends directly on which elementary codes correspond to which letters, and on the composition of letters in the message. Given a specific message and a specific coding scheme, it is possible to choose a permutation of codes for which the length of the message code is minimal.

The algorithm for assigning elementary codes so that the length of the code of a fixed message $S$ is minimal for a fixed scheme:

- sort the letters in descending order of the number of occurrences;
- sort the elementary codes in ascending order of length;
- assign the codes to the letters in that order.

Let the alphabet $A = \{a_1, \dots, a_r\}$ and the probabilities of occurrence of letters in the message be given, $P = \langle p_1, \dots, p_r \rangle$, where $p_i$ is the probability of the appearance of the letter $a_i$, letters with zero probability of appearing in the message are excluded, and the letters are ordered in descending order of the probability of their occurrence. The cost of coding for the scheme $\sigma$ under the distribution $P$ is the expected elementary code length per letter of the message, which is designated $l_\sigma(P)$ and defined as

$$l_\sigma(P) = \sum_{i=1}^{r} p_i l_i, \qquad l_i = |\beta_i|.$$

EXAMPLE. For a separable alphabetic coding scheme with $A = \{a, b\}$, $B = \{0, 1\}$ and elementary code lengths $l_1$, $l_2$, the coding cost under a probability distribution $\langle p_1, p_2 \rangle$ is $p_1 l_1 + p_2 l_2$, so the same scheme has different costs under different probability distributions.
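The assignment rule for a fixed message and the notion of coding cost can be sketched as follows. The message, the set of elementary codes, and the probabilities are hypothetical values picked for the example, and the function names are not from the source.

```python
from collections import Counter


def assign_codes(message, elementary_codes):
    """Give the shortest elementary codes to the most frequent letters."""
    letters = [letter for letter, _ in Counter(message).most_common()]
    codes = sorted(elementary_codes, key=len)
    return dict(zip(letters, codes))


def encoded_length(message, table):
    """Length of the message code under a letter-by-letter table."""
    return sum(len(table[letter]) for letter in message)


def coding_cost(probabilities, table):
    """Expected elementary code length per letter: sum of p_i * l_i."""
    return sum(p * len(table[letter]) for letter, p in probabilities.items())


# Hypothetical message and a prefix set of elementary codes.
message = "abracadabra"
table = assign_codes(message, ["110", "0", "10", "1110", "1111"])
print(table["a"], encoded_length(message, table))

# The same two-letter scheme costs differently under different distributions.
scheme = {"a": "0", "b": "01"}
print(coding_cost({"a": 0.5, "b": 0.5}, scheme))
print(coding_cost({"a": 0.9, "b": 0.1}, scheme))
```

Swapping the codes of a frequent and a rare letter can only lengthen the message code, which is why sorting both lists and pairing them in order is enough for a fixed scheme.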

Topic 5.4. Huffman encoding

This algorithm was invented in 1952 by David Huffman.

Topic 5.5. Arithmetic coding

As in the Huffman algorithm, everything starts with a table of elements and the corresponding probabilities. Suppose the input alphabet consists of only three elements, $a_1$, $a_2$, and $a_3$, with $P(a_1) = 1/2$, $P(a_2) = 1/3$, $P(a_3) = 1/6$. Suppose also that we need to encode the sequence $a_1, a_1, a_2, a_3$. Let us split the interval $[0, 1)$ …

… where $p$ is some fixed number, $0 < p < (r-1)/2r$, and the "cardinality" bound […], where $T_r(p) = -p \log_r(p/(r-1)) - (1-p) \log_r(1-p)$, has been substantially improved. It is conjectured that the upper bound obtained by the method of random code selection is asymptotically exact, i.e., $I_r(n, [pn]) \sim n T_r(2p)$. Proving or refuting this conjecture is one of the central problems of coding theory.

Most constructions of error-correcting codes are effective when the code length $n$ is sufficiently large. In this connection, questions related to the complexity of the devices that perform encoding and decoding (the encoder and decoder) acquire particular importance. Restrictions on the admissible type of decoder or on its complexity can lead to an increase in the redundancy needed to ensure a given noise immunity. For example, the minimum redundancy of a code in $B_2^n$ for which there exists a decoder consisting of a shift register and one majority element and correcting one error has order […] (compare with (2)). As mathematical models of the encoder and decoder, circuits of functional elements are usually considered, and complexity is understood as the number of elements in the circuit. For known classes of error-correcting codes, possible encoding and decoding algorithms have been studied and upper bounds on the complexity of the encoder and decoder have been obtained. Some relationships have also been found between the coding rate, the noise immunity of the coding, and the complexity of the decoder (see the literature below).

Another direction of research in coding theory is related to the fact that many results (for example, Shannon's theorem and bound (3)) are not "constructive", but are theorems on the existence of infinite sequences $\{K_n\}$ of codes. In this regard, efforts are being made to prove these results in the class of those sequences $\{K_n\}$ of codes for which there exists a Turing machine that recognizes whether an arbitrary word of length $l$ belongs to the set in time that grows slowly with respect to $l$ (e.g., $l \log l$).

Some new constructions and methods for deriving bounds developed in coding theory have led to significant progress in questions that at first glance are very far from the traditional problems of coding theory. Here we should point out the use of the maximal single-error-correcting code in the asymptotically optimal method of realizing functions of the algebra of logic by contact circuits; the fundamental improvement of the upper bound for the density of packing $n$-dimensional Euclidean space with equal balls; and the use of inequality (1) in estimating the complexity of implementing one class of functions of the algebra of logic by formulas. The ideas and results of coding theory find further development in the problems of synthesizing self-correcting circuits and reliable circuits from unreliable elements.
Lit.:
- Shannon C., Works on information theory and cybernetics, trans. from English, M., 1963.
- Berlekamp E., Algebraic coding theory, trans. from English, M., 1971.
- Peterson W., Weldon E., Error-correcting codes, trans. from English, 2nd ed., M., 1976.
- Discrete Mathematics and Mathematical Questions of Cybernetics, vol. 1, M., 1974, section 5.
- Bassalygo L. A., Zyablov V. V., Pinsker M. S., "Problems of information transmission", 1977, vol. 13, No. 3, pp. 5-17.
- Sidelnikov V. M., "Mat. Sb.", 1974, vol. 95, no. 1, pp. 148-158.

V. I. Levenshtein.

Mathematical encyclopedia. - M.: Soviet Encyclopedia. I. M. Vinogradov. 1977-1985.

Optimal encoding

The same message can be encoded in different ways. An optimal code is one for which the minimum time is spent on message transmission. If the transmission of each elementary character (0 or 1) takes the same time, then the optimal code is the one with the minimum possible length.

Example 1. Let there be a random variable X(x1, x2, x3, x4, x5, x6, x7, x8) having eight states with a given probability distribution. To encode an alphabet of eight letters with a uniform binary code, we need three characters per letter: 000, 001, 010, 011, 100, 101, 110, 111. To answer whether this code is good or not, we need to compare it with the optimal value, that is, determine the entropy.

Having determined the redundancy $L$ by the formula $L = 1 - H/H_0 = 1 - 2.75/3 \approx 0.083$, we see that it is possible to reduce the code length by about 8.3%. The question arises: is it possible to compose a code in which there will be, on average, fewer elementary characters per letter? Such codes exist. These are the Shannon-Fano and Huffman codes.

The principle of constructing optimal codes:

1. Each elementary character must carry the maximum amount of information. For this, the elementary characters (0 and 1) must occur, on average, equally often in the encoded text. Entropy in this case will be maximal.
2. Letters of the primary alphabet that have a higher probability must be assigned shorter code words of the secondary alphabet.
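The entropy, the redundancy of the uniform code, and the effect of a Huffman code can be checked numerically. The probability distribution in the sketch below is an assumption: it was chosen only because its entropy equals the value 2.75 used in the formula above, and it is not taken from the source. The Huffman construction is the standard merge-two-least-probable procedure; function names are hypothetical.

```python
import heapq
import math


def entropy(probs):
    """Shannon entropy in bits: H = -sum(p * log2 p)."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def huffman_lengths(probs):
    """Code-word lengths of a binary Huffman code for the given probabilities."""
    # Heap items: (probability, unique tie-breaker, indices of merged symbols).
    heap = [(p, i, [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    lengths = [0] * len(probs)
    next_id = len(probs)
    while len(heap) > 1:
        p1, _, first = heapq.heappop(heap)
        p2, _, second = heapq.heappop(heap)
        for i in first + second:
            lengths[i] += 1          # every merge adds one bit to these symbols
        heapq.heappush(heap, (p1 + p2, next_id, first + second))
        next_id += 1
    return lengths


# Assumed distribution over eight states (chosen so that H = 2.75 bits).
probs = [1/4, 1/4, 1/8, 1/8, 1/16, 1/16, 1/16, 1/16]

H = entropy(probs)
H0 = math.log2(len(probs))           # 3 bits per letter for the uniform code
redundancy = 1 - H / H0
lengths = huffman_lengths(probs)
average = sum(p * l for p, l in zip(probs, lengths))

print(H, H0, round(redundancy, 3))
print(lengths, average)
```

For this assumed distribution the Huffman code gives shorter words to the more probable letters and its average length coincides with the entropy, so the redundancy of the uniform three-character code is removed completely.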

To analyze various sources of information, as well as the channels over which it is transmitted, it is necessary to have a quantitative measure that makes it possible to estimate the amount of information contained in a message and carried by a signal. Such a measure was introduced in 1948 by the American scientist C. Shannon.

Further, we assume that the source of information is discrete, giving out a sequence of elementary messages (i,), each of which is selected from a discrete ensemble (alphabet) a, a 2 ,...,d A; To is the volume of the alphabet of the information source.

Each elementary message contains certain information as a set of information (in the example under consideration) about the state of the considered information source. To quantify the measure of this information, its semantic content, as well as the degree of importance of this information for its recipient, is not important. Note that prior to receiving a message, the recipient always has uncertainty as to which message I am. from among all possible will be given to him. This uncertainty is estimated using the prior probability P(i,) of the transmission of the message i,. We conclude that an objective quantitative measure of information contained in an elementary message of a discrete source is set by the probability of choosing a given message and determines cc as a function of this probability. The same function characterizes the degree of uncertainty that the recipient of information has regarding the state of the discrete source. It can be concluded that the degree of uncertainty about the expected information determines the requirements for information transmission channels.

In general, the probability $P(a_i)$ that the source chooses some elementary message $a_i$ (hereinafter called a symbol) depends on the symbols chosen earlier, i.e. it is a conditional probability and does not coincide with the a priori probability of that choice.

Note that $\sum_{i=1}^{K} P(a_i) = 1$, since all $a_i$ form a complete group of events. The amount of information in a symbol is defined through some functional dependence $J(a_i) = \varphi[P(a_i)]$ that satisfies two conditions. First, if $P(a_i) = 1$, i.e. the choice of the symbol by the source is a priori determined, then $J(a_i) = 0$. Second, if the source chooses the symbols $a_i$ and $a_j$ in succession and $P(a_i, a_j)$ is the probability of such a choice, then the amount of information contained in the pair of symbols is equal to the sum of the amounts of information contained in each of the symbols $a_i$ and $a_j$. This property of a quantitative measure of information is called additivity.

Let $P(a_i)$ be the conditional probability of choosing the symbol $a_i$ after all the symbols preceding it, and $P(a_j \mid a_i)$ the conditional probability of choosing the symbol $a_j$ after $a_i$ and all preceding ones. Given that $P(a_i, a_j) = P(a_i) P(a_j \mid a_i)$, the additivity condition can be written

$$\varphi[P(a_i) P(a_j \mid a_i)] = \varphi[P(a_i)] + \varphi[P(a_j \mid a_i)]. \quad (5.1)$$

We introduce the notation $P(a_i) = P$, $P(a_j \mid a_i) = Q$ and rewrite condition (5.1):

$$\varphi(PQ) = \varphi(P) + \varphi(Q). \quad (5.2)$$

We assume that $P, Q \neq 0$. Using expression (5.2), we determine the form of the function $\varphi(P)$. Differentiating with respect to $P$, multiplying by $P \neq 0$ and denoting $PQ = R$, we write

$$R \varphi'(R) = P \varphi'(P). \quad (5.3)$$

Note that relation (5.3) must hold for any $P \neq 0$ and $R \neq 0$. This requirement means that the right and left sides of (5.3) are constant: $P \varphi'(P) = R \varphi'(R) = K = \text{const}$. We thus arrive at the equation $P \varphi'(P) = K$, and after integration we get

$$\varphi(P) = K \ln P + C.$$

Taking into account that $\varphi(1) = 0$, so that $C = 0$, we rewrite

$$\varphi(P) = K \ln P.$$

Consequently, under the two conditions imposed on $J(a_i)$, the form of the functional dependence of $J(a_i)$ on the probability of choosing the symbol $a_i$ is uniquely defined up to a constant coefficient $K$:

$$J(a_i) = K \ln P(a_i).$$

The coefficient $K$ affects only the scale and determines the system of units for measuring the amount of information. Since $\ln P \le 0$, it makes sense to choose $K < 0$ so that the measure of the amount of information $J(a_i)$ is positive.

Taking $K = -1$, we write

$$J(a_i) = -\ln P(a_i).$$

It follows that the unit of the amount of information is equal to the information that an event of probability $1/e$ has occurred. Such a unit of the amount of information is called a natural unit. More often it is assumed that $K = -1/\ln 2$; then

$$J(a_i) = -\log_2 P(a_i).$$

Thus we arrive at the binary unit of the amount of information, which is carried by a message about the occurrence of one of two equally probable events and is called a "bit". This unit is widespread because of the use of binary codes in communication technology. Choosing the base of the logarithm in the general case, we obtain

$$J(a_i) = -\log P(a_i), \quad (5.9)$$

where the logarithm can have an arbitrary base.
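As a small numerical illustration of the logarithmic measure and its additivity, the following sketch computes the amount of information in a symbol from its probability. The function name `information` and the sample probabilities are assumptions made for this example.

```python
from math import e, log


def information(p, base=2.0):
    """Amount of information J = log(1/p) in a symbol of probability p.

    base=2 gives binary units (bits), base=e gives natural units.
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("probability must lie in (0, 1]")
    return log(1.0 / p) / log(base)


print(information(0.5))              # one of two equally probable events
print(information(1 / e, base=e))    # event of probability 1/e, natural units
print(information(1.0))              # a priori determined choice

# Additivity: for probabilities that multiply, the information adds.
p, q = 0.5, 0.25
print(information(p * q), information(p) + information(q))
```

An event of probability 1/2 carries one binary unit, an event of probability 1/e carries one natural unit, and a symbol chosen with certainty carries no information.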

The additivity property of the quantitative measure of information makes it possible, on the basis of expression (5.9), to determine the amount of information in a message consisting of a sequence of symbols. The probability that the source chooses such a sequence is computed taking into account all previously emitted messages.

The quantitative measure of the information contained in an elementary message $a_i$ does not give an idea of the average amount of information $J(A)$ emitted by the source when one elementary message $a_i$ is selected.

The average amount of information characterizes the source of information as a whole and is one of the most important characteristics of communication systems.

Let us define this characteristic for a discrete source of independent messages with an alphabet of size $K$. Denote by $H(A)$ the average amount of information per symbol; it is the mathematical expectation of the random variable $J$, the amount of information contained in a randomly selected symbol $a_i$:

$$H(A) = -\sum_{i=1}^{K} P(a_i) \log P(a_i). \quad (5.10)$$

The average amount of information per symbol is called the entropy of the source of independent messages. Entropy is an indicator of the average a priori uncertainty when choosing the next character.

It follows from expression (5.10) that if one of the probabilities $P(a_i)$ is equal to one (and hence all the others are equal to zero), then the entropy of the information source is zero: the message is completely determined.

The entropy is maximal if the prior probabilities of all possible symbols are equal, i.e. $P(a_i) = 1/K$; then

$$H(A)_{\max} = -\sum_{i=1}^{K} \frac{1}{K} \log \frac{1}{K} = \log K. \quad (5.11)$$

If the source independently selects binary symbols with probabilities $P_1 = P(a_1)$ and $P_2 = 1 - P_1$, then the entropy per symbol is

$$H(A) = -P_1 \log P_1 - (1 - P_1) \log (1 - P_1).$$

Fig. 5.1 shows the dependence of the entropy of a binary source on the a priori probability of choosing one of the two binary symbols. The figure also shows that the entropy is maximal at $P_1 = P_2 = 0.5$ and in binary units equals $\log_2 2 = 1$.

Fig. 5.1. Dependence of the entropy at $K = 2$ on the probability of choosing one of the symbols

The entropy of sources with an equiprobable choice of symbols but with different alphabet sizes $K$ increases logarithmically with $K$.
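The properties of the entropy of a source of independent symbols listed above can be checked numerically with a short sketch. The function name `entropy` and the probability values are assumptions for this illustration; binary units are used throughout.

```python
from math import log2


def entropy(probs):
    """Entropy in binary units per symbol of a source of independent symbols."""
    return sum(p * log2(1 / p) for p in probs if p > 0)


# A completely determined choice carries no uncertainty.
print(entropy([1.0, 0.0, 0.0]))

# Equiprobable symbols: the entropy equals log2(K) and grows with K.
for k in (2, 4, 8, 32):
    print(k, entropy([1 / k] * k))

# Binary source: the entropy as a function of P1 peaks at P1 = 0.5.
for p1 in (0.1, 0.3, 0.5, 0.7, 0.9):
    print(p1, entropy([p1, 1 - p1]))
```

The last loop reproduces a few points of the curve described for the binary source: the values are symmetric about 0.5 and reach one binary unit there.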

If the probabilities of choosing the symbols differ, the entropy of the source $H(A)$ drops relative to the possible maximum $H(A)_{\max} = \log K$.

The greater the correlation between symbols, the less freedom there is in choosing subsequent symbols and the less information a newly selected symbol carries. This is because the entropy of a conditional distribution cannot exceed the entropy of the corresponding unconditional distribution. Denote the entropy of a source with memory and alphabet size $K$ by $H(A \mid A')$, and the entropy of a source without memory but with the same alphabet by $H(A)$, and prove the inequality

$$H(A \mid A') \le H(A). \quad (5.13)$$

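Introducing the notation $P(a_i \mid a_j')$ for the conditional probability of choosing the symbol $a_i$ ($i = 1, 2, \ldots, K$) given that the symbol $a_j'$ ($j = 1, 2, \ldots, K$) was chosen previously, and omitting the transformations, we write without proof

$$H(A \mid A') - H(A) = \sum_{j=1}^{K} \sum_{i=1}^{K} P(a_j') P(a_i \mid a_j') \log \frac{P(a_i)}{P(a_i \mid a_j')} \le 0, \quad (5.14)$$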

which proves inequality (5.13).

Equality in (5.13) or (5.14) is achieved when

$$P(a_i \mid a_j') = P(a_i).$$

This means that the conditional probability of choosing a symbol is equal to the unconditional probability of choosing it, which is possible only for memoryless sources.
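The relation between the entropy of a source with memory and that of a memoryless source can be illustrated on a two-symbol source whose next symbol depends only on the previous one. This is a sketch under that assumption; the transition probabilities and function names are hypothetical.

```python
from math import log2


def entropy(probs):
    """Entropy in binary units of a probability distribution."""
    return sum(p * log2(1 / p) for p in probs if p > 0)


def stationary_two_state(rows):
    """Stationary symbol probabilities; rows[j][i] = P(a_i | previous a_j)."""
    p01, p10 = rows[0][1], rows[1][0]
    total = p01 + p10
    return [p10 / total, p01 / total]


def source_entropies(rows):
    """Return (conditional entropy, unconditional entropy) per symbol."""
    pi = stationary_two_state(rows)
    h_uncond = entropy(pi)
    h_cond = sum(pj * entropy(row) for pj, row in zip(pi, rows))
    return h_cond, h_uncond


# Strongly correlated symbols: the previous symbol is usually repeated.
print(source_entropies([[0.9, 0.1], [0.1, 0.9]]))

# Memoryless source: both rows coincide, so the two entropies are equal.
print(source_entropies([[0.7, 0.3], [0.7, 0.3]]))
```

In the first case the conditional entropy is well below the unconditional one; in the second case the conditional probabilities coincide with the unconditional ones and the two entropies agree.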

Interestingly, the entropy of Russian text is about 1.5 binary units per character. At the same time, for the same alphabet size $K = 32$ with independent and equiprobable symbols, $H(A)_{\max} = 5$ binary units per character. Thus the presence of internal dependencies reduces the entropy by a factor of approximately 3.3.

An important characteristic of a discrete source is its redundancy $\rho_s$:

$$\rho_s = \frac{H(A)_{\max} - H(A)}{H(A)_{\max}} = 1 - \frac{H(A)}{\log K}.$$

The redundancy of the information source is a dimensionless quantity between 0 and 1. Naturally, in the absence of redundancy $\rho_s = 0$.

To transmit a certain amount of information from a source that has no correlation between symbols and chooses all symbols with equal probability, the minimum possible number of transmitted symbols $n_{\min} = n_0$ is required (the amount of information being $n_0 H(A)_{\max}$). To transmit the same amount of information from a source with entropy $H(A)$ (the symbols are interdependent and not equally probable), an average of $n = n_0 H(A)_{\max} / H(A)$ symbols is required.
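The redundancy and the resulting growth in the number of symbols can be computed directly from the figures quoted above for Russian text (entropy of about 1.5 binary units per character, alphabet size 32). The function names and the value of 1000 symbols are assumptions made for this sketch.

```python
from math import log2


def redundancy(h, k):
    """Redundancy of a source with entropy h (binary units) and alphabet size k."""
    return 1 - h / log2(k)


def symbols_needed(n0, h, k):
    """Average number of symbols needed to carry what n0 symbols of a
    maximum-entropy source with the same alphabet would carry."""
    return n0 * log2(k) / h


print(redundancy(1.5, 32))            # redundancy of the text source
print(redundancy(5.0, 32))            # no redundancy at maximum entropy
print(symbols_needed(1000, 1.5, 32))  # about 3.3 times more symbols
```

With these figures the redundancy is 0.7, and the same information takes roughly 3.3 times as many symbols as it would with independent, equiprobable symbols.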

A discrete source is also characterized by its performance, which is determined by the number of symbols $v_s$ emitted per unit of time:

$$H'(A) = v_s H(A).$$

If the entropy $H(A)$ is expressed in binary units and time in seconds, then the performance $H'(A)$ is the number of binary units per second. For discrete sources that produce stationary symbol sequences of sufficiently large length $n$, the following concepts are introduced: typical and atypical sequences of source symbols, into which all sequences of length $n$ are divided. All $N_{typ}(A)$ typical sequences of the source have approximately the same probability of occurrence as $n \to \infty$.

The total probability of occurrence of all atypical sequences tends to zero. In accordance with equality (5.11), taking the probability of each typical sequence to be $1/N_{typ}(A)$, the entropy of the source per sequence of length $n$ is $\log N_{typ}(A)$, and then

$$H(A) = \frac{1}{n} \log N_{typ}(A).$$

Consider the amount and rate of information transmission over a discrete channel with noise. Previously, we considered the information produced by a discrete source in the form of a sequence of symbols $\{a_i\}$.

Now suppose that the source information is encoded and is represented by a sequence of code symbols $\{b_i\}$ ($i = 1, 2, \ldots, m$, where $m$ is the code base) matched to a discrete information transmission channel, at whose output a sequence of symbols $\{\hat{b}_j\}$ appears.

We assume that the encoding operation is one-to-one: from the sequence of symbols $\{b_i\}$ one can uniquely restore the sequence $\{a_i\}$, i.e. the source information can be restored completely from the code symbols.

However, if we consider the output symbols $\{\hat{b}_j\}$ and the input symbols $\{b_i\}$, then, because of interference in the information transmission channel, exact recovery is impossible. The entropy of the output sequence $H(\hat{B})$ may be greater than the entropy of the input sequence $H(B)$, but the amount of information for the recipient has not increased.

In the best case, one-to-one relations between input and output are possible and useful information is not lost; in the worst case, nothing can be said about the input symbols from the output symbols of the information transmission channel, useful information is completely lost in the channel.

Let us estimate the loss of information in a noisy channel and the amount of information transmitted over a noisy channel. We consider a symbol to have been transmitted correctly if, when the symbol $b_i$ is transmitted, the symbol $\hat{b}_j$ with the same index ($i = j$) is received. Then for an ideal channel without noise we write:

$$P(\hat{b}_j \mid b_i) = \begin{cases} 1, & i = j, \\ 0, & i \ne j. \end{cases}$$

Given the symbol $\hat{b}_j$ at the channel output, uncertainty about the transmitted symbol is inevitable because of inequalities (5.21). We can assume that the information in the symbol $b_i$ is not transmitted completely and that part of it is lost in the channel because of interference. Based on the concept of a quantitative measure of information, we take as the numerical expression of the uncertainty that arises at the channel output after receiving the symbol $\hat{b}_j$:

$$J(b_i \mid \hat{b}_j) = -\log P(b_i \mid \hat{b}_j), \quad (5.22)$$

and it determines the amount of information lost in the channel during transmission.

Fixing $\hat{b}_j$ and averaging (5.22) over all possible symbols $b_i$, we obtain the sum

$$H(B \mid \hat{b}_j) = -\sum_{i=1}^{m} P(b_i \mid \hat{b}_j) \log P(b_i \mid \hat{b}_j), \quad (5.23)$$

which determines the amount of information lost when an elementary symbol is transmitted over a memoryless channel and the symbol $\hat{b}_j$ is received.

Averaging the sum (5.23) over all $\hat{b}_j$, we obtain a value that we denote by $H(B \mid \hat{B})$. It determines the amount of information lost when one symbol is transmitted over a memoryless channel:

$$H(B \mid \hat{B}) = -\sum_{j=1}^{m} \sum_{i=1}^{m} P(b_i, \hat{b}_j) \log P(b_i \mid \hat{b}_j),$$

where $P(b_i, \hat{b}_j)$ is the joint probability of the event that the symbol $b_i$ is transmitted and the symbol $\hat{b}_j$ is received.

$H(B \mid \hat{B})$ depends on the characteristics of the information source $B$ at the channel input and on the probabilistic characteristics of the communication channel. Following Shannon, in statistical communication theory $H(B \mid \hat{B})$ is called the channel unreliability.

The conditional entropy $H(B \mid \hat{B})$, the entropy $H(B)$ of the discrete source at the channel input and the entropy $H(\hat{B})$ at its output cannot be negative. In an interference-free channel, the channel unreliability is $H(B \mid \hat{B}) = 0$. In accordance with (5.20), we note that $H(B \mid \hat{B}) \le H(B)$, and equality takes place only when the input and output of the channel are statistically independent:

$$P(b_i \mid \hat{b}_j) = P(b_i).$$

The output symbols then do not depend on the input symbols: this is the case of a broken channel or very strong interference.

As before, an analogous relation can be written for typical sequences, from which it follows that in the absence of interference the channel unreliability is $H(B \mid \hat{B}) = 0$.

By the information $J(B, \hat{B})$ transmitted over the channel on average per symbol we mean the difference between the amount of information at the channel input $H(B)$ and the information lost in the channel $H(B \mid \hat{B})$.

If the source of information and the channel are without memory, then

Expression (5.27) determines the entropy of the channel's output symbols. Part of the information at the channel output is useful, and the rest is false, since it is generated by interference in the channel. Note that $H(\hat{B} \mid B)$ expresses information about the interference in the channel, and the difference $H(\hat{B}) - H(\hat{B} \mid B)$ is the useful information that has passed through the channel.
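The quantities discussed above (input and output entropy, channel unreliability, entropy due to interference, and the transmitted information) can be computed from a table of joint probabilities of input and output symbols. The sketch below assumes such a table is given; the function names and the two example channels are hypothetical. It uses the standard identity that the joint entropy equals the output entropy plus the unreliability.

```python
from math import log2


def entropy(probs):
    """Entropy in binary units of a probability distribution."""
    return sum(p * log2(1 / p) for p in probs if p > 0)


def channel_information(joint):
    """joint[i][j] = probability that b_i is sent and output symbol j is received."""
    p_in = [sum(row) for row in joint]
    p_out = [sum(col) for col in zip(*joint)]
    h_in, h_out = entropy(p_in), entropy(p_out)
    h_joint = entropy([p for row in joint for p in row])
    unreliability = h_joint - h_out   # information lost in the channel
    noise_entropy = h_joint - h_in    # output entropy caused by interference
    return {
        "H_in": h_in,
        "H_out": h_out,
        "unreliability": unreliability,
        "noise_entropy": noise_entropy,
        "transmitted": h_in - unreliability,
    }


# Binary channel, equiprobable input, 10% of symbols flipped (assumed values).
noisy = channel_information([[0.45, 0.05], [0.05, 0.45]])
print(noisy)
print(noisy["H_out"] - noisy["noise_entropy"])  # same as noisy["transmitted"]

# Output independent of input: nothing is transmitted.
print(channel_information([[0.25, 0.25], [0.25, 0.25]])["transmitted"])
```

Both ways of computing the transmitted information, from the input side and from the output side, give the same value, and it never exceeds the input entropy.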

Note that the vast majority of sequences formed at the output of the channel are atypical and have a very small total probability.

As a rule, the most common type of interference is taken into account: additive noise $N(t)$. The signal at the output of the channel has the form:

$$\hat{B}(t) = B(t) + N(t). \quad (5.28)$$

For discrete signals, the equivalent noise, following from (5.28), has a discrete structure. The noise is a discrete random sequence, similar to the sequences of input and output signals. Let us denote the symbols of the alphabet of additive noise in a discrete channel as $c_l$ ($l = 0, 1, 2, \ldots, m - 1$). Conditional transition probabilities in such a channel

Since $0 \le H(B \mid \hat{B}) \le H(B)$, the information that the output sequence of the discrete channel $\hat{B}(t)$ carries about the input $B(t)$, or vice versa, satisfies $0 \le H(B) - H(B \mid \hat{B}) \le H(B)$.

In other words, the information transmitted over the channel cannot exceed the information at its input.

If the channel input receives on average $v_k$ symbols per second, then the average information transmission rate over a channel with noise can be determined:

$$J'(B, \hat{B}) = v_k J(B, \hat{B}) = H'(B) - H'(B \mid \hat{B}) = H'(\hat{B}) - H'(\hat{B} \mid B),$$

where $H'(B) = v_k H(B)$ is the performance of the source at the channel input; $H'(B \mid \hat{B}) = v_k H(B \mid \hat{B})$ is the unreliability of the channel per unit of time; $H'(\hat{B}) = v_k H(\hat{B})$ is the performance of the source formed by the channel output (which gives out partly useful and partly false information); $H'(\hat{B} \mid B) = v_k H(\hat{B} \mid B)$ is the amount of false information created by interference in the channel per unit of time.

The concepts of the amount and speed of information transmission over a channel can be applied to various sections of a communication channel. This may be the "encoder input - decoder output" section.

Note that, by expanding the section of the channel under consideration, it is impossible to exceed the rate on any of its component parts. Any irreversible transformation leads to a loss of information. Irreversible transformations include not only the effect of interference but also detection and the decoding of codes with redundancy. There are ways to reduce the losses at reception, namely "reception as a whole".

Consider the capacity of a discrete channel and the optimal coding theorem. Shannon introduced a characteristic that determines the maximum possible information transmission rate over a channel with known properties (noise) under a number of restrictions on the ensemble of input signals. This is the channel capacity $C$. For a discrete channel

$$C = \max_{B} v_k \left[ H(B) - H(B \mid \hat{B}) \right],$$

where the maximum is taken over all possible input sources $B$ for given $v_k$ and input alphabet size $m$.

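Based on the definition of the capacity of a discrete channel, we write

$$C = v_k \max_{B} \left[ H(B) - H(B \mid \hat{B}) \right] = v_k \max_{B} \left[ H(\hat{B}) - H(\hat{B} \mid B) \right].$$

Note that $C = 0$ when the input and output are independent (high noise level in the channel) and, accordingly,

$$C = v_k \log m$$

in the absence of interference acting on the signal.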

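For a binary symmetric channel without memory

$$C = v_k \left[ 1 + p \log_2 p + (1 - p) \log_2 (1 - p) \right],$$

where $p$ is the symbol error probability.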

Fig. 5.2.

The graph of the dependence of the capacity of the binary channel on the parameter $p$ is shown in Fig. 5.2. At $p = 1/2$ the channel capacity is $C = 0$ and the conditional entropy is $H(B \mid \hat{B}) = 1$. The graph is of practical interest for $0 \le p \le 1/2$.
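The dependence of the capacity of a binary symmetric memoryless channel on the error probability can be tabulated with a few lines of code. This is a sketch using the standard capacity expression for such a channel; the function name `bsc_capacity`, the parameter names and the default rate of one symbol per second are assumptions.

```python
from math import log2


def bsc_capacity(p, v_k=1.0):
    """Capacity in binary units per second of a binary symmetric channel
    with error probability p and v_k symbols per second."""
    def plogp(x):
        # The limit of x*log2(x) as x -> 0 is 0.
        return x * log2(x) if x > 0 else 0.0

    return v_k * (1 + plogp(p) + plogp(1 - p))


for p in (0.0, 0.01, 0.1, 0.25, 0.5):
    print(p, bsc_capacity(p))
```

The capacity falls from one binary unit per symbol at `p = 0` to zero at `p = 0.5`, and the curve is symmetric about `p = 0.5`.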

Shannon's fundamental theorem on optimal coding is related to the concept of capacity. Its formulation for a discrete channel is as follows: if the performance of the message source $H'(A)$ is less than the channel capacity $C$:

$$H'(A) < C,$$

then there exists a method of optimal coding and decoding for which the error probability, or the channel unreliability $H(A \mid \hat{A})$, can be made arbitrarily small. If

$$H'(A) > C,$$

no such method exists.

In accordance with Shannon's theorem, the finite value $C$ is the limiting rate of error-free information transmission over the channel. However, for a noisy channel the theorem does not indicate how to find the optimal code. Nevertheless, the theorem radically changed views on the fundamental possibilities of information transmission technology. Before Shannon, it was believed that in a noisy channel an arbitrarily small error probability could be obtained only by reducing the information transmission rate to zero. An example is the increase in communication fidelity achieved by repeating symbols in a memoryless channel.
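The repetition example can be made concrete with a short calculation. The sketch below assumes a binary symmetric memoryless channel with error probability 0.1, each binary symbol sent an odd number of times, and decoding by majority vote; these choices and the function name are assumptions made for illustration.

```python
from math import comb


def repetition_error(n, p):
    """Probability that a majority vote over n repetitions (n odd) is wrong
    when each copy is flipped independently with probability p."""
    if n % 2 == 0:
        raise ValueError("n must be odd so that the vote cannot tie")
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


p = 0.1
for n in (1, 3, 5, 11, 21):
    # Rate 1/n: information symbols per transmitted symbol.
    print(n, 1 / n, repetition_error(n, p))
```

The error probability falls as the number of repetitions grows, but only at the cost of a rate that tends to zero. Shannon's theorem states that this trade-off is not necessary as long as the rate stays below the capacity.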

Several rigorous proofs of Shannon's theorem are known. For a discrete memoryless channel the theorem was proved by the method of random coding. In this approach one considers the set of all randomly selected codes for a given source and a given channel and establishes that the probability of erroneous decoding, averaged over all codes, tends asymptotically to zero as the length of the message sequence grows without bound. Thus only the existence of a code that makes error-free decoding possible is proved; no specific coding method is proposed. At the same time, it becomes clear in the course of the proof that, while keeping the entropy of the ensemble of message sequences equal to the entropy of the one-to-one corresponding set of code words used for transmission, additional redundancy should be introduced into the ensemble $B$ to increase the mutual dependence of the sequence of code symbols. This can only be done by expanding the set of code sequences from which the code words are selected.

Although the main coding theorem for noisy channels does not indicate a specific way of choosing a particular code, and no such way appears in its proof either, it can be shown that for most randomly chosen codes, when sufficiently long message sequences are encoded, the probability of erroneous decoding only slightly exceeds the average. However, the practical possibilities of coding in long blocks are limited by the difficulty of implementing memory and logical processing for sequences of a huge number of code elements, as well as by the growing delay in the transmission and processing of information. In fact, of particular interest are results that make it possible to determine the probability of erroneous decoding for finite lengths $n$ of the code blocks used. In practice, one settles for moderate delay values and achieves an increase in transmission fidelity with incomplete use of the channel capacity.

Selecting a GIS processing program

Selecting a GIS processing program Calculation and analysis of an electric circuit of an alternating current

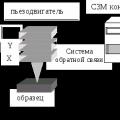

Calculation and analysis of an electric circuit of an alternating current Scanning probe microscope Current state and development of scanning probe microscopy

Scanning probe microscope Current state and development of scanning probe microscopy